Why AI Startups Trust Third-Party Reviews to Scale Up

Launching an AI startup can feel like standing at the edge of a deep pool. The code may be clever, the training data rich, and the demo dazzling, yet founders still wonder whether people will trust what they have built. Before pouring more time and money into new hires, larger cloud budgets, and global marketing, many young companies look for an outside signal that says, “Yes, this product works for real users.”

For that reason, third-party review sites have become a secret weapon. A single page of authentic feedback can highlight strengths, expose blind spots, and calm the nerves of cautious investors. While browsing feedback, many founders stumble upon resources such as seoelinks reviews, where practitioners share blunt stories about search visibility tools. Reading how another team handled growth pains gives founders a mirror for their own roadmap. The habit of studying neutral opinions early, and often, can shape smarter scaling moves down the road.

Why Early Validation Matters for AI Startups

AI products behave differently from ordinary software. They learn from data, change over time, and sometimes surprise even their own makers. That unpredictability makes outside validation more important than slick branding. Third-party reviews offer validation because they come from people with nothing to lose by being honest.

If a sentiment-analysis API mislabels sarcasm, a reviewer will say so. If a computer-vision app saves a warehouse thousands of dollars, the reviewer will brag about it. Patterns inside these stories help founders detect whether their minimum viable model is truly ready for a wider audience or still too fragile for scale.

Data scientists inside a seven-person startup already juggle model tuning, infrastructure costs, and customer questions. Digging through independent reviews may feel like extra work, yet it saves time in the long run. Teams can spot recurring complaints, fix them early, and prevent a flood of support tickets later. In short, early validation through reviews offers a low-cost, high-resolution snapshot of real-world performance.

How Third-Party Feedback Eases Investor Doubt

Pitch decks often swirl with technical jargon: attention heads, differential privacy, reinforcement learning. While investors appreciate cutting-edge terms, what they really want is proof that the market cares. Independent reviews give that proof in a language anyone can understand. A five-star rating that mentions “cut onboarding time in half” speaks louder than a dozen colorful charts.

When a startup shows investors a spreadsheet of real user comments gathered from neutral platforms, due diligence can move faster. Venture partners may need fewer extra calls with reference customers because much of the feedback is already public. That transparency builds credibility, especially for first-time founders who lack past exits to bolster their story.

Moreover, reviews can reveal product stickiness. If multiple people say they replaced an older tool and never looked back, investors see a moat forming. On the flip side, a pile of feature requests may hint at upsell opportunities. Either way, the data helps investors estimate lifetime value and make smarter bets.

Identifying Product-Market Fit Through Patterns

Finding product-market fit in AI is tricky because models can solve many problems at once. Reviews act like colored pins on a map, showing where the solution truly shines. If the majority of comments come from e-commerce managers praising image tagging accuracy, the team learns that retail is their strongest beachhead, even if the original vision targeted healthcare.

By clustering review themes, founders can build a heat map of pains solved. Natural language processing tools make this even faster: they group comments about speed, cost, or accuracy automatically. When “setup simplicity” bubbles to the top, marketing crews know which benefit to spotlight on landing pages.
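To make the idea concrete, here is a minimal Python sketch that swaps in plain keyword matching for a real NLP clustering model: it tags each review with every theme whose keywords it contains and tallies the results into a simple heat map. The `THEMES` keyword lists and the function names are hypothetical, and substring matching will produce false positives (for example, "fast" inside "breakfast"), so a production pipeline would more likely use a trained classifier or embedding-based clustering.

```python
from collections import Counter

# Hypothetical keyword lists; tune them to your own product's vocabulary.
THEMES = {
    "speed": ("slow", "fast", "latency", "speed"),
    "cost": ("price", "pricing", "expensive", "cost"),
    "accuracy": ("accurate", "accuracy", "mislabel", "wrong"),
    "setup simplicity": ("setup", "onboarding", "install"),
}

def tag_themes(review: str) -> set:
    """Return every theme whose keywords appear in the review (case-insensitive substring match)."""
    text = review.lower()
    return {theme for theme, words in THEMES.items() if any(w in text for w in words)}

def theme_heat_map(reviews: list) -> Counter:
    """Count how many reviews mention each theme; one review can hit several themes."""
    counts = Counter()
    for review in reviews:
        counts.update(tag_themes(review))
    return counts

# Made-up reviews for illustration.
reviews = [
    "Setup took five minutes and onboarding was painless.",
    "Tagging accuracy is great, but pricing is steep.",
    "Easy setup, and the API is fast.",
]
print(theme_heat_map(reviews).most_common(1))  # [('setup simplicity', 2)]
```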

Patterns also warn when fit is fading. A spike in complaints about model drift signals that the training data no longer reflects reality. Catching that early prevents churn. Because these insights emerge from unsolicited opinions rather than curated interviews, they often reveal truths customers might hesitate to share in a sales call.

Building Trust With Enterprise Prospects

Large companies move carefully, especially when algorithms might influence hiring, pricing, or medical decisions. Procurement teams therefore search the web for unbiased opinions before scheduling a proof of concept. A startup that has already accumulated dozens of thoughtful reviews looks safer to a risk-averse buyer than one that offers only a glossy brochure.

Trust goes beyond star ratings. Decision makers read tone, context, and how the vendor follows up. When they see founders thanking reviewers and outlining fixes, they infer a culture of accountability. Conversely, silence under a negative comment raises a red flag that support may be lacking after the contract is signed.

Proactive founders use this dynamic to their advantage. They encourage pilot users to publish detailed case studies on independent platforms. Those stories then act as social proof during long sales cycles. The result? Shorter security reviews, fewer legal hurdles, and faster purchase orders—all because strangers vouched for the product in public.

Guiding Product Roadmaps With Real Voices

Engineers often guess which feature to build next based on gut feelings or competitor chatter. Third-party reviews turn that guessing game into a clear to-do list. When a dozen users mention throttling limits during peak traffic, capacity planning jumps to the top of the backlog. When reviewers rave about a time-saving API wrapper, doubling down on that module makes sense.

Because reviews are timestamped, they also highlight shifting priorities. A surge in comments about data residency in the past month may reflect new regulations or customer awareness. Product managers can spot the trend early and schedule compliance tasks before they morph into deal breakers.
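A timestamped surge like this can be checked with a simple window comparison. The sketch below, assuming reviews arrive as `(date, text)` pairs, counts keyword mentions in the most recent 30 days against the 30 days before. The window length, the 2x ratio, and the three-mention minimum in the hypothetical `is_surge` helper are illustrative thresholds rather than recommended values, and the sample reviews are made up.

```python
from datetime import date, timedelta

def mention_surge(reviews, keyword, today, window_days=30):
    """Count reviews mentioning `keyword` in the latest window and the one before it.

    `reviews` is a list of (date, text) pairs; returns (recent, previous).
    """
    recent_start = today - timedelta(days=window_days)
    previous_start = recent_start - timedelta(days=window_days)
    recent = previous = 0
    for posted, text in reviews:
        if keyword.lower() not in text.lower():
            continue  # case-insensitive substring match only
        if recent_start < posted <= today:
            recent += 1
        elif previous_start < posted <= recent_start:
            previous += 1
    return recent, previous

def is_surge(recent, previous, ratio=2.0, min_mentions=3):
    """Hypothetical rule: enough recent mentions and at least `ratio` times the prior window."""
    return recent >= min_mentions and recent >= ratio * max(previous, 1)

# Made-up reviews for illustration.
today = date(2024, 6, 30)
reviews = [
    (date(2024, 6, 25), "Need EU data residency options"),
    (date(2024, 6, 20), "Data residency is a blocker for our legal team"),
    (date(2024, 6, 10), "Where is data residency on the roadmap?"),
    (date(2024, 5, 15), "Quick question about data residency"),
    (date(2024, 5, 20), "Latency is great"),
]
recent, previous = mention_surge(reviews, "data residency", today)
print(recent, previous, is_surge(recent, previous))  # 3 1 True
```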

Importantly, reviews offer language straight from the audience’s mouth. Marketing teams can lift phrases like “plug-and-play AI” or “explainable dashboards” and echo them in ads, documentation, and investor updates. Using customer words can help search visibility and also reassures future buyers that the startup truly listens.

Strengthening Recruitment Efforts

Scaling requires talent, yet top engineers are picky about where they land. Before accepting an offer, many candidates scan review sites to gauge a startup’s momentum and culture. Public praise for robust documentation or responsive support signals a place that values craftsmanship and user success.

Recruiters can weave these independent comments into outreach emails. Instead of generic claims, they highlight how reviewers praised the model’s fairness or the team’s quick bug fixes. Concrete proof makes the opportunity stand out among the sea of offers from large tech firms.

Reviews that highlight a clear mission—such as cutting carbon footprints or democratizing data—also attract values-driven engineers. Mentioning those authentic snippets during interviews can sway undecided candidates, because belonging to a purposeful project sometimes matters more than extra stock options.

Sharing unfiltered reviews with candidates also assures them that the company embraces transparency, a value closely tied to open research and ethical AI development.

Facing the Risks of Fake or Biased Reviews

Of course, not every star rating shines with honesty. Competitors may plant negative remarks, and overly eager fans may inflate positives. AI startups must watch for such distortions so that strategic decisions rest on solid ground.

One safeguard is volume. A larger pool of feedback dilutes the impact of any single outlier. Another is verification: some platforms tag reviews posted by authenticated customers or link them to transaction IDs. Founders can favor these sources when compiling dashboards. Platform owners monitor the landscape, yet founders must remain vigilant themselves.
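The arithmetic behind the volume argument is easy to check. Assuming each review is a `(stars, verified)` pair, where the `verified` flag stands in for whatever authentication marker a given platform exposes, the sketch below shows how much a single planted one-star rating moves the average in a small pool versus a large one, and what happens when only verified reviews are counted. The function name and data are illustrative.

```python
from statistics import mean

def average_rating(reviews, verified_only=False):
    """Mean star rating of (stars, verified) pairs, optionally counting verified reviews only."""
    ratings = [stars for stars, verified in reviews if verified or not verified_only]
    if not ratings:
        raise ValueError("no reviews to average")
    return mean(ratings)

# Made-up data: one unverified 1-star outlier among genuine 5-star reviews.
small_pool = [(5, True)] * 4 + [(1, False)]
large_pool = [(5, True)] * 49 + [(1, False)]

print(average_rating(small_pool))                      # 4.2
print(average_rating(large_pool))                      # 4.92
print(average_rating(small_pool, verified_only=True))  # 5
```

With five reviews the outlier drags the average down by 0.8 stars; with fifty it costs only 0.08.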

Teams should also run basic text analysis, such as sentiment scoring and duplicate detection, on their own review data. Sudden spikes of identical wording often indicate coordinated manipulation. When questionable entries appear, publicly asking the reviewer for clarification shows the community that the company takes integrity seriously.
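Spotting bursts of identical wording does not require anything sophisticated. Below is a minimal sketch, assuming reviews are `(date, text)` pairs, that normalizes case, punctuation, and spacing, then flags any text posted at least three times within seven days. Both thresholds and the function names are hypothetical, and because the check only catches verbatim copies, a real pipeline would likely add near-duplicate matching (for example, Jaccard similarity over word sets) to catch lightly paraphrased campaigns.

```python
import re
from collections import defaultdict
from datetime import date

def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace so trivial edits still match."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())

def flag_identical_bursts(reviews, min_copies=3, max_span_days=7):
    """Return normalized texts posted at least `min_copies` times within `max_span_days`."""
    groups = defaultdict(list)
    for posted, text in reviews:
        groups[normalize(text)].append(posted)
    flagged = []
    for text, dates in groups.items():
        dates.sort()
        # Slide over the sorted dates looking for min_copies posts inside the span.
        for i in range(len(dates) - min_copies + 1):
            if (dates[i + min_copies - 1] - dates[i]).days <= max_span_days:
                flagged.append(text)
                break
    return flagged

# Made-up reviews for illustration.
reviews = [
    (date(2024, 3, 1), "Worst AI tool ever!!!"),
    (date(2024, 3, 2), "worst AI tool ever"),
    (date(2024, 3, 3), "Worst AI tool, ever."),
    (date(2024, 3, 2), "Accuracy dropped after the last update."),
]
print(flag_identical_bursts(reviews))  # ['worst ai tool ever']
```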

Finally, transparency about how reviews are collected fosters trust. Publishing a short policy that discourages incentives or scripted responses keeps the playing field fair. Honest reviews, whether glowing or critical, remain the most valuable compass for scaling decisions.

Turning Review Insights Into Scalable Processes

As the user base grows, manually reading every comment becomes impossible. Successful AI startups therefore turn review analysis into a repeatable process. They pipe incoming ratings into dashboards, classify them by topic, and assign each cluster to a responsible team.

Customer success tracks “setup issues,” engineering owns “performance bugs,” and product management monitors “new feature requests.” Weekly or bi-weekly meetings ensure nothing falls through the cracks. Over time, recurring themes feed into a public changelog, letting users see that their voices directly influence development.
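The ownership split above amounts to a lookup table. This sketch assumes an upstream classifier (not shown) has already labeled each review with a topic; it groups labeled reviews into per-team queues and sends unrecognized topics to a hypothetical `triage` queue so nothing is silently dropped. The topic and team names come from the text; the function name and sample reviews are illustrative.

```python
from collections import defaultdict

# Topic-to-team ownership as described in the text.
OWNERS = {
    "setup issues": "customer success",
    "performance bugs": "engineering",
    "new feature requests": "product management",
}

def route(tagged_reviews, default_owner="triage"):
    """Group (topic, text) pairs into per-team queues; unknown topics go to default_owner."""
    queues = defaultdict(list)
    for topic, text in tagged_reviews:
        queues[OWNERS.get(topic, default_owner)].append(text)
    return dict(queues)

queues = route([
    ("setup issues", "Docs skip the API key step"),
    ("performance bugs", "Batch jobs time out at 10k rows"),
    ("pricing", "Seats are expensive"),
])
print(queues["triage"])  # ['Seats are expensive']
```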

Automation does not erase the human touch. A short, personalized reply to a critical review can transform a skeptic into an advocate. The act signals that behind the cutting-edge model stands a team that cares. When the company later scales customer support with chatbots and knowledge bases, the habit of prompt engagement remains baked into the culture.

Just as important, leadership reviews quarterly trends to guide resource allocation. If sentiment around response time improves after a GPU upgrade, the executive team has evidence that the investment paid off. Conversely, if privacy concerns linger, it can prioritize hiring a compliance officer before expanding into new regions.
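A before-and-after comparison like the GPU example can be sketched in a few lines. Assuming the team already has per-review sentiment scores for one topic (here on a hypothetical -1 to 1 scale) as `(date, score)` pairs, the function below splits them at the date of the change and compares the means. A gap is evidence rather than proof, since other releases or seasonal effects may land in the same quarter; the function name and data are illustrative.

```python
from datetime import date
from statistics import mean

def sentiment_shift(scores, change_date):
    """Return (mean before, mean on/after) for (date, score) pairs split at change_date."""
    before = [score for posted, score in scores if posted < change_date]
    after = [score for posted, score in scores if posted >= change_date]
    if not before or not after:
        raise ValueError("need scores on both sides of the change date")
    return mean(before), mean(after)

# Made-up "response time" sentiment scores around a hypothetical GPU upgrade on March 1.
scores = [
    (date(2024, 1, 10), -0.4),
    (date(2024, 2, 5), -0.2),
    (date(2024, 4, 12), 0.3),
    (date(2024, 5, 3), 0.5),
]
before, after = sentiment_shift(scores, date(2024, 3, 1))
print(round(before, 2), round(after, 2))  # -0.3 0.4
```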

In this way, the humble user review evolves from anecdote to actionable KPI.

Smart SEO,

Faster Growth!

Most Read Articles

Generative Engine Optimization (GEO)

Learn how Generative Engine Optimization (GEO) helps your content rank in AI search engines like ChatGPT and Google AI. This comprehensive guide explains the differences between SEO and GEO, why it matters for your business, and practical steps to implement GEO strategies for better visibility in AI-generated responses.

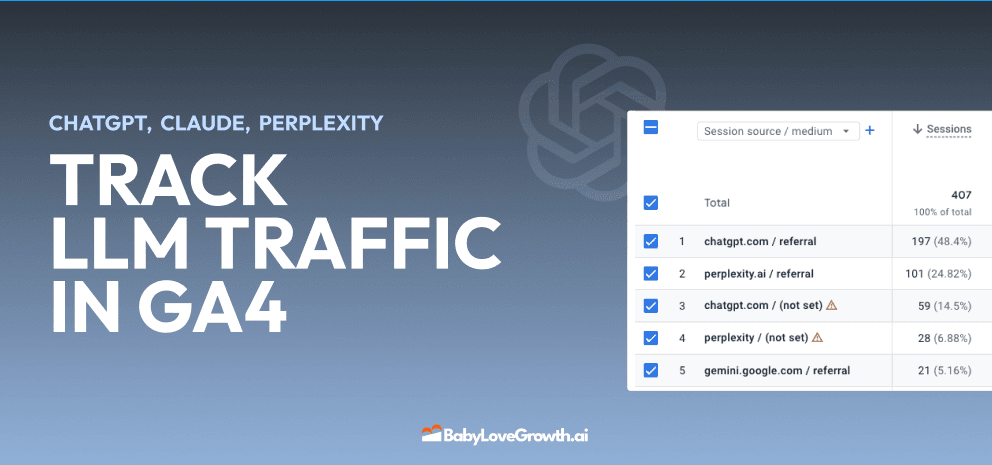

Track LLM Traffic in Google Analytics 4 (GA4)

Learn how to track and analyze traffic from AI sources like ChatGPT, Claude, Perplexity, and Google Gemini in Google Analytics 4. This step-by-step guide shows you how to set up custom filters to monitor AI-driven traffic and make data-driven decisions for your content strategy.

How to Humanize AI Text with Instructions

Learn practical techniques to make AI-generated content sound more natural and human. This guide covers active voice, direct addressing, concise writing, and other proven strategies to transform robotic text into engaging content.

Open AI Revenue and Statistics (2024)

Comprehensive analysis of OpenAI financial performance, user engagement, and market position in 2023. Discover key statistics including $20B valuation, $1B projected revenue, and 100M+ monthly active users.